Hey,

Having spent a good part of my life working with computers, I’ve watched the advent of things like VR, AR, and now AI over the past few decades.

I’ve not been surprised to see the artificial intelligence crowd try to create an AI therapist. It’s a very old idea, actually! I remember interacting with ELIZA back in high school and college. ELIZA was a program that basically acted as a reflective, Rogerian therapist.

It was very basic, and not very useful, but in the days of BASIC programming and Radio Shack TRS-80s, it was fun to play with.

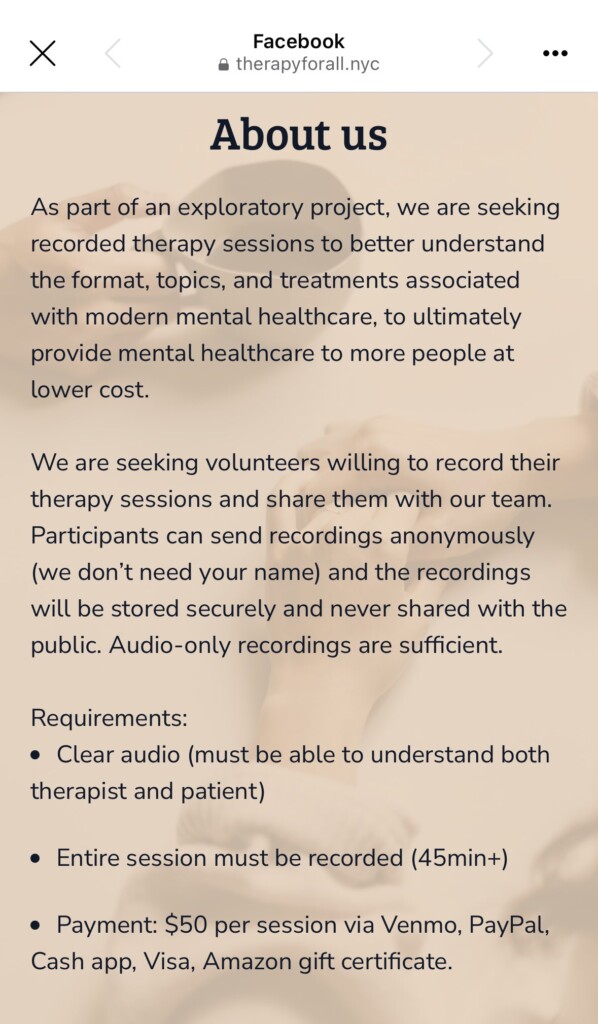

One of the latest entrants into the AI therapy market seems to be a group in NYC, therapyforall.nyc.

They raised a bit of a firestorm in therapist circles by offering people $50 to record their therapy sessions and send them in. That part is not so bad, but they directly stated that you did not need to ask for or get your therapist’s consent, except in states where recording others without their consent is not legal.

At first, like many other therapists, I was outraged by this breach of privacy and trust in the therapeutic relationship: recording a session without ever discussing it. I am still quite negative about this. BUT, as I thought the situation through, I came to a much bigger concern.

AIs are only as good as the information they are trained on. They become biased by it. Just like a child is influenced by his parents. Just like parents are influenced by the news they watch each day.

And I thought: the sessions that THIS AI would receive – they would not be normal, average, good therapy sessions. They would be stilted and poisoned in subtle ways.

- You have a therapy client willing to turn over their most intimate thoughts and emotions for $50. Willing to risk their privacy for that amount of money. Willing to risk their relationship with the therapist for it.

- If the recording is not discussed or consented to, you have a therapy client who is willing to do that to a person they are supposed to be intimate, honest, and open with.

- You have a session where a secret is being held. One side knows. The other doesn’t. But trust me, we FEEL these things as therapists. They bring an odd feeling into the room that something is “off”.

- You have a session where the client knows it’s all going to be recorded, so they will likely self-censor, consciously or unconsciously.

I cannot tell you exactly what effect this training would have on an AI therapist, but I can tell you that these are all things that would have a negative impact on a therapy session. And the “therapy” provided by that AI would be molded by all this. Not good.